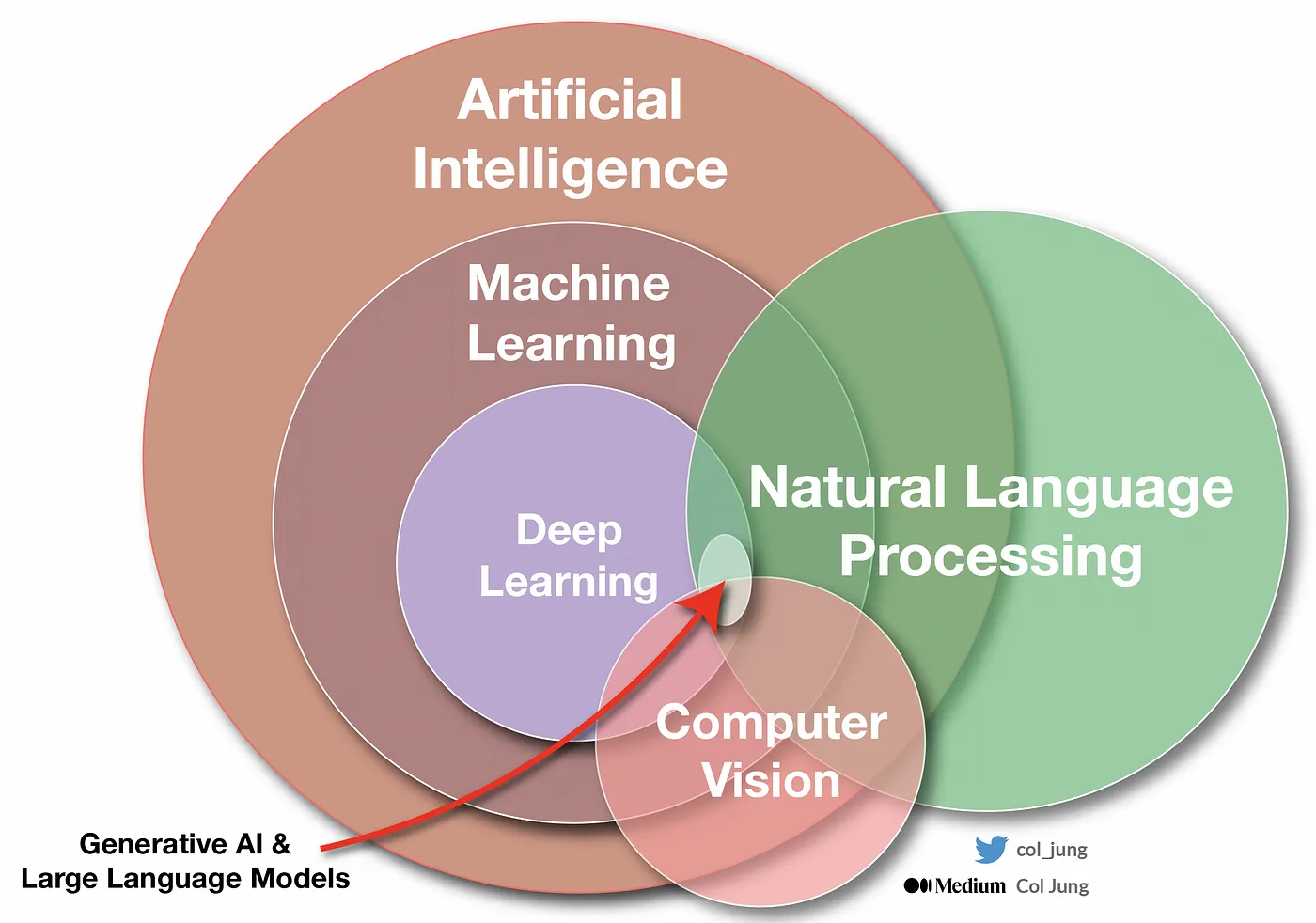

In the fast-moving field of artificial intelligence, two terms come up constantly: Natural Language Processing (NLP) and Large Language Models (LLMs). Both revolve around human language, but they serve different purposes, have distinct capabilities, and contribute to AI in different ways: NLP is a broad field of tasks and techniques, while LLMs are one family of models used within it. This article looks at the differences between NLP and LLMs, their characteristics, and their roles in shaping the future of language technology.

The Nature of NLP

NLP, as the name suggests, is primarily concerned with processing and understanding natural language. It encompasses a wide array of tasks, such as language translation, sentiment analysis, text summarization, and question-answering systems. NLP systems aim to enable machines to comprehend and generate human language in a manner that is contextually relevant and meaningful. It plays a pivotal role in bridging the gap between human communication and machine interaction.
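To make one of these tasks concrete, here is a minimal sketch of sentiment analysis done the classic rule-based way. The word lists and the function names `tokenize` and `sentiment` are illustrative assumptions, not part of any library, and production systems typically rely on much larger lexicons or trained classifiers.

```python
import re
from typing import List

# Hypothetical, tiny sentiment lexicons chosen for illustration only.
POSITIVE = {"good", "great", "love", "excellent", "happy"}
NEGATIVE = {"bad", "terrible", "hate", "poor", "sad"}


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text: str) -> str:
    """Label text by counting lexicon hits; a tie counts as neutral."""
    tokens = tokenize(text)
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for review in ["I love this great phone", "Terrible, bad service"]:
        print(review, "->", sentiment(review))
```

A sketch like this needs no training data at all, which is one reason NLP as a field is broader than any single kind of model.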

Large Language Models (LLMs)

Large Language Models are a specific class of neural network models, typically built on the transformer architecture, that have gained prominence in recent years. Examples include GPT-3, BERT, and T5. These models are trained on massive text datasets. Generative models such as GPT-3 and T5 are known for producing coherent, contextually relevant, human-like text across many domains, while BERT, an encoder-only model, is used mainly for language understanding tasks such as classification. This range makes LLMs versatile tools for a multitude of applications.
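The idea of generating text by repeatedly predicting the next word can be illustrated with a toy count-based bigram model. This is only an analogy under stated assumptions: real LLMs use neural networks with billions of parameters rather than word-pair counts, and the names `train_bigrams` and `generate` and the three-sentence corpus are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count, for every word, which words follow it in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def generate(counts, start, max_words=5):
    """Greedily extend `start` with the most frequent next word."""
    words = [start]
    # Stop at the length limit or when the last word has no known follower.
    while len(words) < max_words and counts.get(words[-1]):
        next_word = counts[words[-1]].most_common(1)[0][0]
        words.append(next_word)
    return " ".join(words)


if __name__ == "__main__":
    # Toy corpus, assumed for illustration only.
    corpus = [
        "the cat sat on the mat",
        "the cat ate the fish",
        "the cat sat down",
    ]
    model = train_bigrams(corpus)
    print(generate(model, "the", max_words=3))
```

The gap between this sketch and an LLM is mostly one of scale and representation: an LLM conditions on a long context rather than a single previous word, and it learns its predictions from far more text.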

Data and Training

One of the key distinctions between NLP and LLMs lies in how training data is used. NLP is a field rather than a single kind of model, so its methods vary widely: some systems are rule-based and need no training data at all, while many traditional NLP models are trained on comparatively small, labeled datasets built for a single task, such as product reviews annotated with sentiment.

LLMs, on the other hand, are pretrained on exceptionally large, mostly unlabeled text corpora drawn from broad sources like books, articles, and web pages. This breadth gives them a general understanding of language that transfers across many tasks. An LLM can then be fine-tuned on domain-specific data, which improves its output in that domain, sometimes at the cost of performance outside it.

Applications

NLP applications are diverse and widespread, impacting numerous industries. Virtual assistants like Siri and Alexa, sentiment analysis for market research, and automated content generation all fall within the purview of NLP. Its versatility is a testament to its potential to streamline language-related tasks across various sectors.

LLMs, with their generative capabilities, have found applications in content generation, language translation, and creative writing. Their fluent, context-aware output makes them valuable for chatbots, drafting tools, and text completion.

Ethical and Privacy Considerations

Both NLP and LLMs have raised concerns related to ethics and privacy. Any model trained on human-written text can inadvertently reproduce biases present in its training data, and generative models such as LLMs can also be misused to produce fake news or other misleading content at scale. Training data may contain personal information as well, which is where the privacy concerns come from. Addressing these issues requires clear guidelines, responsible usage, and continued research into fairness and bias mitigation.

The Future of NLP and LLMs

The fields of NLP and LLMs are poised for continuous growth and development. NLP research aims to enhance natural language understanding, making human-machine interactions more seamless and meaningful. LLMs are expected to evolve with improved contextual understanding and domain-specific capabilities, expanding their applications across various industries.

In conclusion, NLP and Large Language Models are distinct yet interconnected facets of the AI and language processing landscape. NLP is the broader field concerned with understanding and manipulating human language, while Large Language Models like GPT-3 and T5 demonstrate the potential of AI to generate coherent, contextually relevant text. Understanding these differences is vital for harnessing their respective strengths and addressing the unique challenges they present. Together, they contribute to the ongoing transformation of human-machine communication and information handling.
